Terraform Tips: How to Recover Your Deployment From a Terraform Apply Crash

Kosseila Hd

Feb 27, 2023 8:45:00 AM

– “Because sh*t happens, be ready for when it does!” —

Infrastructure automation is a lifesaver for Ops teams in their day-to-day duties, but when you hand full control to new tools and frameworks, watch out for problems that can no longer be fixed manually. It’s also a reminder that it is easier to create a mess than to clean one up.

Today, we’ll show you how to recover from a failed terraform apply when half of the resources have already been provisioned but an error occurred in the middle of the deployment. This can be especially challenging if you have a large number of resources and you can’t destroy them either in the console or through terraform destroy. Not to mention all the $$ that your cloud provider will keep charging for the unusable resources, yikes!

How did I get here? It was not even my code. I was just minding my own business, trying to spin up a Fortinet firewall in Oracle Cloud using their reference architecture (Oracle-QuickStart).

Here’s a link to the GitHub repo I used, but the code itself is not relevant: this kind of config error can happen anytime.

https://github.com/oracle-quickstart/oci-fortinet/drg-ha-use-case

The scenario is simple:

Clone the GitHub Repository that contains the Terraform configuration

Adjust the authentication parameters such as your API keys for the OCI environment etc

Run the init command

Run the Terraform plan command to make sure everything looks good

$ cd ~/forti-firewall/oci-fortinet/use-cases/drg-ha-use-case

$ terraform init

$ terraform -v

Terraform v1.0.3

+ provider registry.terraform.io/oracle/oci v4.105.0

+ provider registry.terraform.io/hashicorp/template v2.2.0

$ terraform plan

…

Plan: 66 to add, 0 to change, 0 to destroy.

All the plan checks come back positive, so we’re ready to go! Let’s focus on the apply.

$ terraform apply --auto-approve

oci_core_drg.drg: Creating...

oci_core_vcn.web[0]: Creating...

oci_core_app_catalog_listing_resource_version_agreement.mp_image_agreement[0]: Creating...

oci_core_vcn.hub[0]: Creating...

oci_core_vcn.db[0]: Creating...

oci_core_drg_attachment.db_drg_attachment: Creation complete after 14s

oci_core_drg_route_distribution_statement.firewall_drg_route_distribution_statement_two: Creating...

oci_core_drg_route_distribution_statement.firewall_drg_route_distribution_statement_one: Creation complete after 1s

oci_core_drg_route_distribution_statement.firewall_drg_route_distribution_statement_two: Creation complete after 1s

oci_core_drg_route_table.from_firewall_route_table: Creation complete after 23s [id=ocid1.drgroutetable.oc1.ca-toronto-1.aaaaaaaag3ohsxxxxxxxxxxxxnykq]

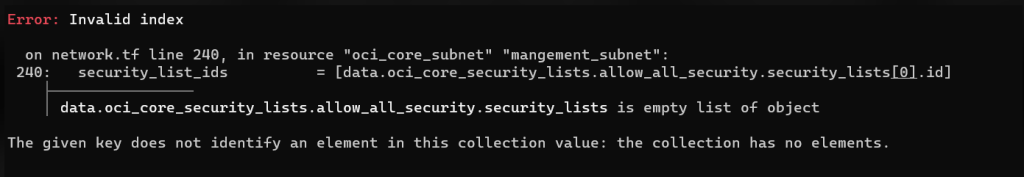

│ Error: Invalid index

│

│ on network.tf line 240, in resource "oci_core_subnet" "mangement_subnet":

│ 240: security_list_ids = [data.oci_core_security_lists.allow_all_security.security_lists[0].id]

│ ├────────────────

│ │ data.oci_core_security_lists.allow_all_security.security_lists is empty list of object

│

│ The given key does not identify an element in this collection value: the collection has no elements.

...

SURPRISE!

Despite our successful terraform plan, the deployment was halted a few minutes later by the error above.

Now I want to fix my code issue, and a clean wipeout (terraform destroy) is the only way to go. But is it even possible?

Not even close:

The deployment stopped halfway through with blocking errors

It’s really stuck, as we can’t terraform destroy to undo our changes, nor can we proceed further

The plan is a mess because the dependencies are now a mess (empty data source results, etc.)

The section below explains why.

1. Terraform Destroy: Doesn’t work. It still hits the same data source error, so it’s stuck.

$ terraform destroy --auto-approve

oci_core_vcn.hub[0]: Refreshing state... [id=ocid1.vcn.oc1.ca-toronto-1xxx]

oci_core_network_security_group_security_rule.rule_ingress_all: Refreshing state... [id=F44A50]

oci_core_network_security_group_security_rule.rule_egress_all: Refreshing state... [id=B14C98]

...

│ Error: Invalid index

│

│ on network.tf line 240, in resource "oci_core_subnet" "mangement_subnet":

│ 240: security_list_ids = [data.oci_core_security_lists.allow_all_security.security_lists[0].id]

│ ├────────────────

│ │ data.oci_core_security_lists.allow_all_security.security_lists is empty list of object

…2 more occurrences of the same error, for 2 other security list data sources

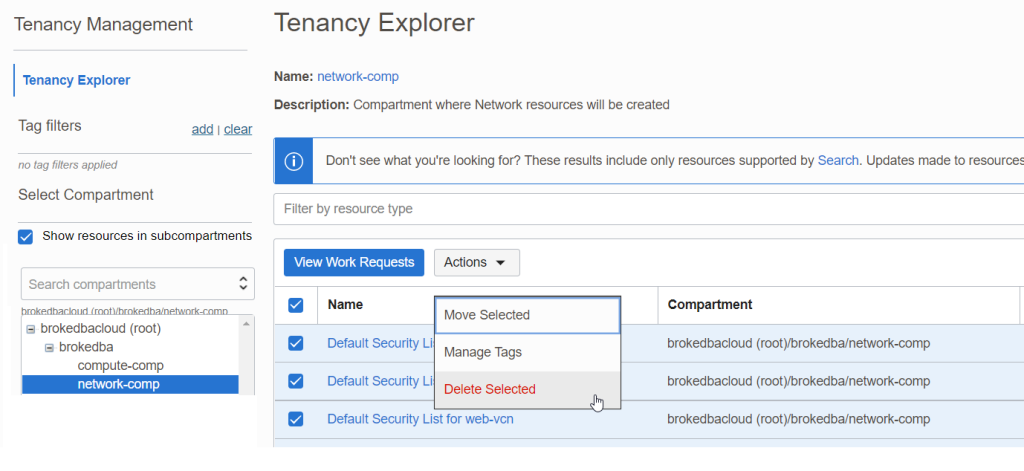

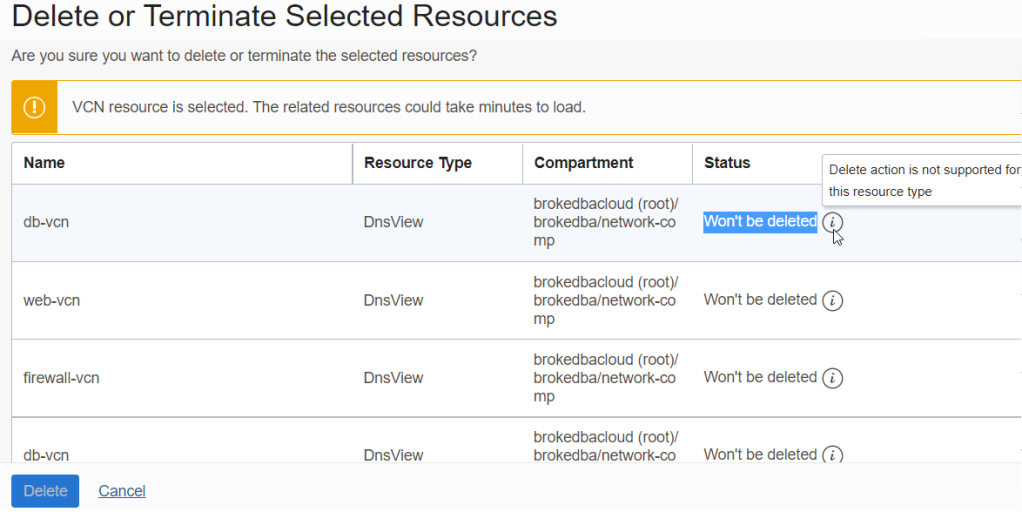

2. Destroy from the Console: Same result. The resources couldn’t be destroyed no matter what order I chose.

I used the super handy OCI Tenancy Explorer, which was suggested to me by Suraj Ramesh on Twitter.

The OCI Console kept showing “Won’t be deleted” and the delete button was even grayed out due to dependencies.

All right, the situation is clear: it’s a stalemate. But we can already draw the following conclusions:

Fetching an empty list from a data source in a resource block can cause a deployment to fail miserably

`terraform plan` did not catch this error because the data source result was only known during the apply

Terraform doesn’t have a failsafe mode that allows recovery when this kind of bug occurs

Data Sources are to be used with caution 🙂
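One way to spot this pattern before an apply is to search the configuration for hard-coded `[0]` indexes into data source results, like the one on line 240 of network.tf. The sketch below is only a heuristic, assuming a grep that supports `-r` and `--include` (GNU and BSD grep do). The function name `find_data_index` is made up for illustration.

```shell
# Heuristic lint: list every place where a .tf file indexes a data source
# attribute with a hard-coded [0], which fails with "Invalid index" when the
# data source returns an empty list.
# find_data_index is a hypothetical helper name, not a Terraform command.
find_data_index() {
  # $1: directory holding the Terraform configuration (defaults to ".")
  grep -rnE --include='*.tf' \
    '(^|[^A-Za-z0-9_.])data\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+(\.[A-Za-z0-9_-]+)+\[0\]' \
    "${1:-.}"
}
```

Running `find_data_index .` from the configuration directory prints `file:line:` for each hit and returns a non-zero status when nothing matches. A hit is not necessarily a bug; it only marks a spot where an empty result would break the run.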

— “Time to switch to the hacking mode!” —

After hours of toiling to find a way to get outta this jam, I understood I wouldn’t be able to fix the terraform error while I was mired in the swamp. I had to find a way to get back to square one, which led me to the below fix.

ALL YOU CAN TAINT:

“terraform taint” is a command used to mark a resource as ‘tainted’ in the state file. This forces the next apply to destroy and recreate the resource. That’s cool, but I needed to taint every resource that had been created, so I came up with a bulk command that uses terraform state list to taint all 33 of them.

$ terraform state list | grep -v ^data | xargs -n1 terraform taint

Resource instance oci_core_drg.drg has been marked as tainted.

Resource instance oci_core_drg_attachment.db_drg_att has been marked as tainted.

…

Resource instance oci_core_vcn.db[0] has been marked as tainted.

Resource instance oci_core_vcn.hub[0] has been marked as tainted.

Resource instance oci_core_vcn.web[0] has been marked as tainted.

Resource instance oci_core_volume.vm_volume-a[0] has been marked as tainted.

Resource instance oci_core_volume.vm_volume-b[0] has been marked as tainted.

...33 resources tainted in total
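The one-liner above works for the addresses in this stack. As a hedged variant, the sketch below reads the addresses line by line, because xargs treats quotes specially and would strip them from string-keyed addresses such as `aws_instance.example["blue"]` (a made-up address). It also skips data sources nested inside modules. The function names `managed_addresses` and `taint_all` are hypothetical, and the filter assumes no module is literally named `data`.

```shell
# Keep only managed resources from `terraform state list` output (stdin).
# Data sources show up as "data.<type>.<name>" at the root, or as
# "module.<name>.data.<type>.<name>" inside a module.
# Assumption: no module is itself named "data".
managed_addresses() {
  # grep exits non-zero when every line is filtered out; treat that as OK.
  grep -Ev '(^|\.)data\.' || true
}

# Taint every managed resource, one address per terraform call.
# Reading line by line passes each address through unchanged, quotes included.
taint_all() {
  terraform state list | managed_addresses | while IFS= read -r addr; do
    terraform taint "$addr"
  done
}
```

Calling `taint_all` from the configuration directory does the same job as the xargs pipeline, one `terraform taint` per address.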

This bulk taint makes it possible to clean up all the created resources through terraform destroy, after an implicit refresh.

$ terraform destroy --auto-approve

oci_core_drg.drg: Refreshing state... [id=ocid1.xxx]

oci_core_vcn.hub[0]: Refreshing state... [id=ocid1.vcn.oc1.ca-toronto-1.xxx]

...

Plan: 0 to add, 0 to change, 33 to destroy.

oci_core_route_table.ha_route_table[0]: Destroying

...

Destroy complete! Resources: 33 destroyed.

Having all resources wiped out by Terraform, we can now begin anew with a clean slate.

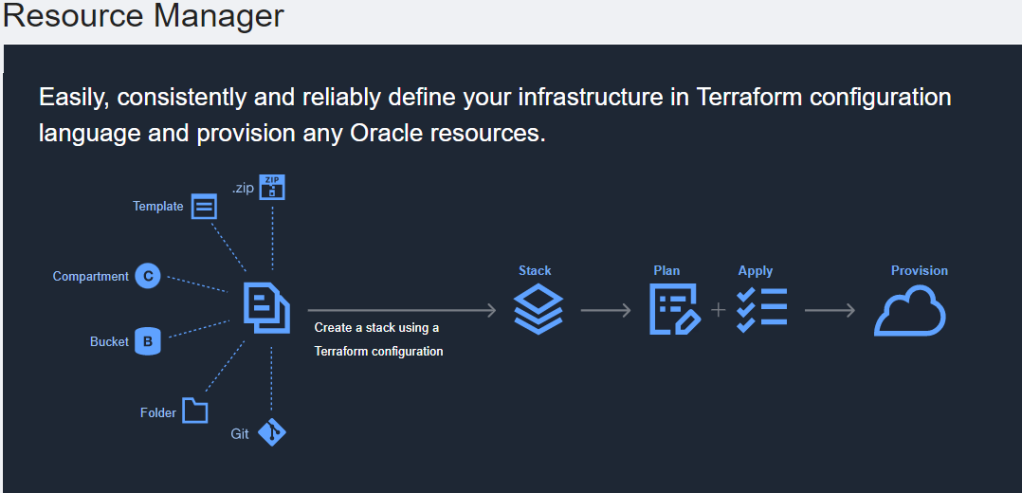

Resource Manager is an OCI Solution that enables users to manage, provision, and govern their cloud resources.

It provides a unified view of all deployed resources and uses Terraform under the hood. A deployment is called a stack, into which we load our Terraform configuration to automate and orchestrate deployments (plan-apply-destroy).

This makes sense if you want to keep your deployment configurations centralized in your cloud.

The repository I used even had a link to deploy it from OCI Resource Manager, as shown above.

Note: Other cloud providers offer similar services, but they use their own IaC languages rather than Terraform.

Solution: Exporting the State and Tainting

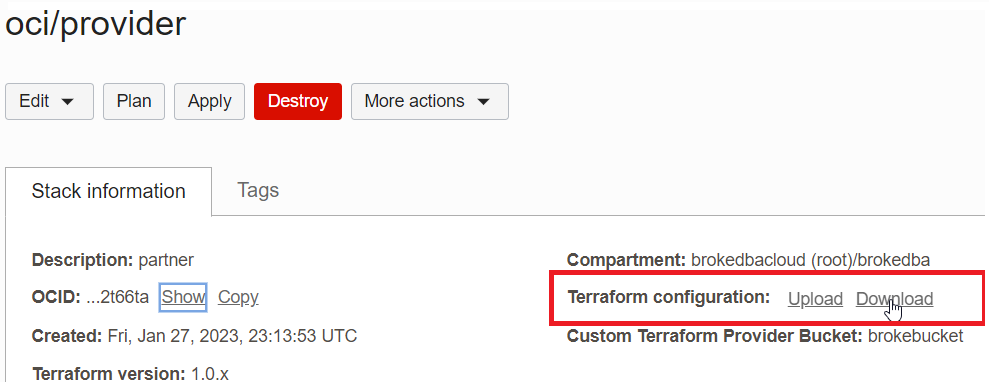

If you were running your terraform apply from OCI Resource Manager, you would have to:

1. Download the configuration from RM (or clone from GitHub)

2. Import the state file directly from RM, see below (Import State)

Important: Load both the Terraform configuration (unzipped) and the state file in the same directory

3. Taint your resources: after an init and a refresh, all resources in the cloud are visible and you can start tainting them

$ cd ~/exported_location/drg-ha-use-case

$ terraform init

$ terraform refresh

$ terraform state list | grep -v ^data | xargs -n1 terraform taint

... # all resources are now tainted

# Destroy the resources

$ terraform destroy --auto-approve

Destroy complete! Resources: 33 destroyed.
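Because the steps above depend on the configuration and the state file sitting in the same directory, a small pre-flight check can be run before terraform init. This is a sketch that assumes the state was saved under Terraform’s default local file name, `terraform.tfstate`; `check_workdir` is a hypothetical helper name.

```shell
# Pre-flight check: confirm a directory holds both a local state file and at
# least one .tf file before running terraform init/refresh/taint in it.
# check_workdir is a hypothetical helper; terraform.tfstate is Terraform's
# default local state file name (an assumption about how the state was saved).
check_workdir() {
  cw_dir="${1:-.}"
  if [ ! -f "$cw_dir/terraform.tfstate" ]; then
    echo "missing state file: $cw_dir/terraform.tfstate" >&2
    return 1
  fi
  for cw_file in "$cw_dir"/*.tf; do
    # If the glob matched nothing, cw_file is the literal pattern and -f fails.
    if [ -f "$cw_file" ]; then
      return 0
    fi
  done
  echo "no .tf files found in $cw_dir" >&2
  return 1
}
```

For example, `check_workdir ~/exported_location/drg-ha-use-case && terraform init` only proceeds when both pieces are in place.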

We just learned how to quickly remediate a Terraform deployment that got stuck due to a blocking error.

Although Terraform doesn’t have a failsafe mode, we can still leverage `taint` in similar failure cases.

I also got to code review 🙂 the third-party Terraform configs (opened and answered 2 issues for this stack).

Before loading your deployment into Resource Manager, it’s important to deploy/test it locally first (better for troubleshooting, i.e., taint).

The logical error behind the failure? A mistake from the maintainers (wrong data source compartment).

`taint` is deprecated; HashiCorp recommends using the -replace option with terraform apply instead.

$ terraform apply -replace="aws_instance.example[0]"
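The -replace option can be given several times in one command, so the bulk pattern used earlier with taint carries over. Below is a minimal sketch, assuming addresses arrive one per line as printed by terraform state list. `replace_flags` is a hypothetical helper, and whether a bulk replace would have unstuck the deployment described in this post was not tested here.

```shell
# Turn resource addresses (one per line on stdin) into -replace=ADDR flags,
# one flag per output line. replace_flags is a hypothetical helper name.
replace_flags() {
  while IFS= read -r addr; do
    # Skip blank lines so no empty -replace= flag is emitted.
    if [ -n "$addr" ]; then
      printf '%s%s\n' '-replace=' "$addr"
    fi
  done
}

# Possible usage in bash 4+ (commented out because it needs a real state):
#   mapfile -t flags < <(terraform state list | grep -v '^data' | replace_flags)
#   terraform plan "${flags[@]}"
```

Starting with terraform plan rather than apply gives a chance to review what would be replaced before anything is touched.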
